- Pin Google API client version to fix OAuth issue
- Sort dag.get_task_instances by execution_date
- Forbid creation of a variable with an empty key
- Fix templating bug with DatabricksSubmitRunOperator
- Fix random failure in test_trigger_dag_for_date
- DAGs table has no default entries to show
- Add SSL Config Option for CeleryExecutor w/ RabbitMQ
  - Add BROKER_USE_SSL config to give option to send AMQP messages over SSL
  - Can be set using usual airflow options (e.g.
- Forbid creation of a pool with empty name
- Forbid event creation with end_data earlier than start_date
- Speed up _change_state_for_tis_without_dagrun
- Allowing project_id to have a colon in it.
- Fix exception while loading celery configurations
- Removes restriction on number of scheduler threads

AIRFLOW XCOM DELETE UPDATE

- Update NOTICE and LICENSE files to match ASF requirements
- Support imageVersion in Google Dataproc cluster
- Add clear/mark success for DAG in the UI
- Support nth weekday of the month cron expression
- Propagate SKIPPED to all downstream tasks
- Allow log format customization via airflow.cfg
- Enable copy function for Google Cloud Storage Hook
- Fix text encoding bug when reading logs for Python 3.5
- Remove outdated docstring on BaseOperator
- Add GCP dataflow hook runner change to UPDATING.md
- [AIRFLOW-1273] Add Google Cloud ML version and model operators
- Add Airflow default label to the dataproc operator
- Check if tasks are backfill on scheduler in a join
- Add query_uri param to Hive/SparkSQL DataProc operator
- Google Cloud ML Batch Prediction Operator
- Fix celery executor parsing CELERY_SSL_ACTIVE
- However currently the Content-ID (cid) is not passed, so we need to add it
- Missing AWS integrations on documentation::integrations
- Increase text size for var field in variables for MySQL

AIRFLOW XCOM DELETE PASSWORD

Save username and password in airflow-pr

AIRFLOW XCOM DELETE INSTALL

- Add INSTALL instruction for source releases
- Don't install test packages into python root.
- Handle pending job state in GCP Dataflow hook
- Make MySqlToGoogleCloudStorageOperator compatible with python3
- Replace inspect.stack() with sys._getframe()
- Reset hidden fields when changing connection type
- Fix XSS vulnerability in Variable endpoint
- Avoid attribute error when rendering logging filename
- Add `--celery_hostname` to `airflow worker`
- Make extra a textarea in edit connections form
- Only query pool in SubDAG init when necessary
- Fix invalid obj attribute bug in file_task_handler.py

AIRFLOW XCOM DELETE CODE

- Show tooltips for link icons in DAGs view
- Add AWS DynamoDB hook and operator for inserting batch items
- Expose keepalives_idle for Postgres connections
- Made Dataproc operator parameter names consistent
- Allow creating GCP connection without requiring a JSON file
- Make GCSTaskHandler write to GCS on close
- Add better Google cloud logging documentation
- Fix typo in scheduler autorestart output filename

The approach that worked for me deletes the XComs of all tasks in a DAG with a SQL statement (add the task id to the SQL if only a specific task's XComs need to be deleted). The dag id is dynamic, and the dates should follow the syntax of the SQL dialect in use.
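The code from the original answer did not survive on this page, so the following is only a minimal sketch of the SQL-based deletion described above, not the author's code. It assumes the metadata table is named `xcom` and has `dag_id`, `task_id`, and `execution_date` columns, as in older Airflow releases (newer releases key XComs differently, so check your schema first). The function names `delete_xcoms` and `make_demo_db` are hypothetical, and an in-memory SQLite database stands in for the real metadata database.

```python
import sqlite3
from typing import Optional


def delete_xcoms(conn, dag_id: str, execution_date: str,
                 task_id: Optional[str] = None) -> int:
    """Delete XCom rows for one DAG and one day; return the number of rows removed.

    Assumes an `xcom` table with dag_id, task_id and execution_date columns.
    The `?` placeholders and date() function are SQLite syntax; other
    databases and drivers use different placeholders and date functions.
    """
    sql = "DELETE FROM xcom WHERE dag_id = ? AND date(execution_date) = date(?)"
    params = [dag_id, execution_date]
    if task_id is not None:
        # Narrow the delete to a single task's XComs.
        sql += " AND task_id = ?"
        params.append(task_id)
    cur = conn.execute(sql, params)
    conn.commit()
    return cur.rowcount


def make_demo_db() -> sqlite3.Connection:
    """Build a throwaway in-memory table that mimics the assumed schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        'CREATE TABLE xcom (dag_id TEXT, task_id TEXT, '
        'execution_date TEXT, "key" TEXT, value TEXT)'
    )
    rows = [
        ("my_dag", "extract", "2021-01-01 00:00:00", "return_value", "1"),
        ("my_dag", "load", "2021-01-01 00:00:00", "return_value", "2"),
        ("my_dag", "extract", "2021-01-02 00:00:00", "return_value", "3"),
        ("other_dag", "extract", "2021-01-01 00:00:00", "return_value", "4"),
    ]
    conn.executemany("INSERT INTO xcom VALUES (?, ?, ?, ?, ?)", rows)
    conn.commit()
    return conn


if __name__ == "__main__":
    demo = make_demo_db()
    removed = delete_xcoms(demo, "my_dag", "2021-01-01")
    print(f"removed {removed} XCom rows")
```

Passing the dag id and date as bound parameters, rather than formatting them into the SQL string, keeps a dynamic dag id from breaking or altering the statement. Try it on a copy of the metadata database before running anything similar against a live one.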

AIRFLOW XCOM DELETE WINDOWS

- Change test_views filename to support Windows
- Remove SCHEDULER_RUNS env var in systemd
- Add copy_expert psycopg2 method to PostgresHook
- Correctly hide second chart on task duration page
- Add conn_type argument to CLI when adding connection
- Add missing options to SparkSubmitOperator

triggered dag: airflow triggerdag -conf

From airflow UI, delete all the task.

Note that airflow webserver and airflow scheduler are two completely.

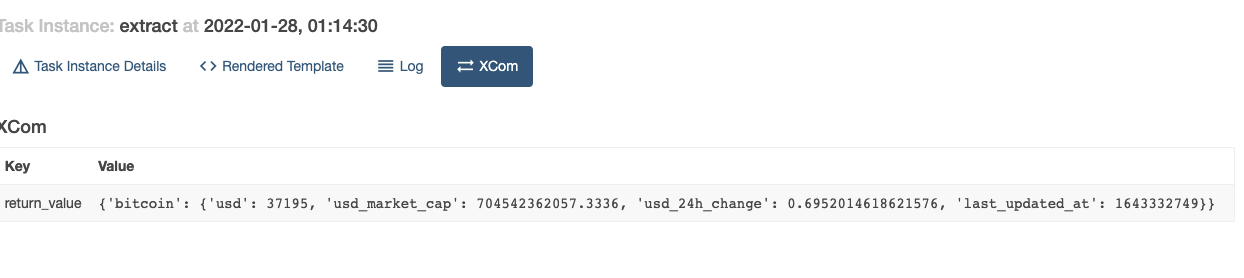

- The web interface should not use the experimental API
- Make experimental API securable without needing Kerberos.
- S3Hook.load_string didn't work on Python3
- Fix S3TaskHandler to work with Boto3-based S3Hook

To access your XComs in Airflow, go to Admin -> XComs. Each XCom is described by the following fields:

- Value: keep in mind that your value must be serializable in JSON or picklable. Notice that serializing with pickle is disabled by default to avoid RCE exploits and other security issues.
- Timestamp: the date at which the XCom was created.
- Execution date: this is important! It corresponds to the execution date of the DagRun that generated the XCom. That is how Airflow avoids fetching an XCom coming from another DagRun.
- Task id: the task id of the task where the XCom was created.
- Dag id: the dag id of the DAG where the XCom was created.
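Since pickle is disabled by default, it can help to check that a value is JSON-serializable before pushing it as an XCom. This is a minimal sketch using only the standard library. The helper name `is_json_serializable` is hypothetical, and plain `json.dumps` only approximates whatever serializer your Airflow version actually applies.

```python
import json
from datetime import datetime


def is_json_serializable(value) -> bool:
    """Return True if the standard json module can encode `value`."""
    try:
        json.dumps(value)
    except (TypeError, ValueError):
        # TypeError: unsupported type; ValueError: e.g. circular reference.
        return False
    return True


if __name__ == "__main__":
    print(is_json_serializable({"rows": 3, "tables": ["a", "b"]}))
    print(is_json_serializable(datetime(2021, 1, 1)))
```

Dicts, lists, strings, numbers, booleans, and `None` pass this check, while objects such as `datetime` or `set` need to be converted first (for example to an ISO string or a list).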
